The term “MCP” is making waves in the AI world, and for good reason. It’s not just another tech acronym. MCP (Model Context Protocol) is changing how AI systems interact with real-time data, tools, and services. Think of it as the missing link that lets AI move beyond static, pre-trained knowledge and into dynamic, real-world problem-solving.

In this post, we’ll break down what MCP really means (without the jargon), look at how it’s being used in practical, everyday AI systems, and explain why it’s becoming a go-to framework for building smarter, more flexible assistants.

By the end, you’ll understand why MCP is being called a game-changer, and how it helps AI models go beyond talking to actually acting on fresh, relevant context.

Table of Contents:

1. What is MCP

2. Real-World Examples of MCP

3. MCP vs. AI Agents

4. GTWY & MCP

What is MCP? A Smarter Way to Connect AI Assistants with Real-World Tools

MCP (Model Context Protocol) is an open standard that acts as a communication bridge, allowing AI assistants to do more than just answer questions: they can take real action. Think of it as a two-way link between your AI and the tools your business already uses, like your CRM, Slack, dev server, or internal dashboards.

With MCP, your AI assistant doesn’t just access information—it becomes context-aware and proactive. It can pull live data, update records, send messages, or even trigger deployments—all securely and seamlessly.

MCP was originally developed by Anthropic, the creators of Claude. Growing support for the protocol means that MCP is fast becoming a standard for building smarter, action-oriented AI experiences.
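To make the “two-way link” idea more concrete, here is a minimal sketch of the kind of exchange involved. MCP messages are JSON-RPC 2.0, and a client typically discovers tools with `tools/list` and invokes one with `tools/call`. Everything else here is an assumption made for illustration: the in-memory `handle_request` dispatcher, the `send_message` tool, and its fake behavior. A real server would use an MCP SDK and a real transport rather than plain function calls.

```python
# Hypothetical tool: a stand-in for a real Slack or CRM call.
def send_message(channel: str, text: str) -> str:
    return f"posted to {channel}: {text}"

# Hypothetical registry: tool name -> metadata plus the function that runs it.
TOOLS = {
    "send_message": {
        "description": "Post a message to a team channel (illustrative only).",
        "inputSchema": {
            "type": "object",
            "properties": {
                "channel": {"type": "string"},
                "text": {"type": "string"},
            },
            "required": ["channel", "text"],
        },
        "handler": send_message,
    }
}

def handle_request(request: dict) -> dict:
    """Answer one JSON-RPC 2.0 request the way a tiny tool server might."""
    method, req_id = request.get("method"), request.get("id")
    if method == "tools/list":
        # Advertise each tool without exposing the Python handler itself.
        tools = [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
    if method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return {"jsonrpc": "2.0", "id": req_id,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        try:
            text, failed = tool["handler"](**params.get("arguments", {})), False
        except TypeError as exc:  # missing or unexpected arguments
            text, failed = str(exc), True
        return {"jsonrpc": "2.0", "id": req_id,
                "result": {"content": [{"type": "text", "text": text}], "isError": failed}}
    return {"jsonrpc": "2.0", "id": req_id,
            "error": {"code": -32601, "message": "Method not found"}}
```

A client would first send `{"jsonrpc": "2.0", "id": 1, "method": "tools/list"}` to see what is available, then send a `tools/call` request naming `send_message` with its `channel` and `text` arguments.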

Real-World Examples of MCP in Action

In real-world use, MCP (Model Context Protocol) shines in situations where an AI assistant needs real-time information from internal systems or cloud-based tools. Here are a few practical examples:

1. Financial Analysis

Imagine a financial analyst using an AI assistant to assess investments. By running an MCP server that hooks into live market feeds or proprietary databases, the assistant can instantly fetch up-to-date data. Instead of writing any new code, the analyst can simply ask, “What’s the latest price for commodity X?” and the MCP server pulls in the current numbers.

2. Software Development

In coding environments like Zed, Replit, or Sourcegraph, MCP can power smart assistants that understand the codebase in real-time. For instance, when a developer asks, “Where is the function foo used?”, the assistant can query an MCP-connected server that taps into GitHub or the local file system—and return relevant snippets right away.

3. Enterprise Support

Companies can use MCP to connect AI assistants to internal systems like CRMs, knowledge bases, and document repositories. Take Block, for example—a financial services company that uses MCP to let its internal chatbot safely access documents and customer records. The assistant can retrieve what it needs without ever exposing raw data to the model.
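To picture what sits behind a question like “Where is the function foo used?” from the software development example, here is a rough sketch of the logic a file-system-backed tool might run. The function name `find_usages`, its parameters, and the result shape are all hypothetical, and this is a plain text search rather than anything a real code-intelligence server does. An MCP server would expose something like it as a tool and return the hits to the assistant.

```python
import re
from pathlib import Path

def find_usages(root: str, symbol: str, pattern: str = "*.py") -> list[dict]:
    """Return file, line number, and text for each line that mentions `symbol`."""
    # Word boundaries keep "foo" from matching "food" or "my_foo".
    word = re.compile(rf"\b{re.escape(symbol)}\b")
    hits = []
    for path in sorted(Path(root).rglob(pattern)):
        if not path.is_file():
            continue
        lines = path.read_text(encoding="utf-8", errors="ignore").splitlines()
        for number, line in enumerate(lines, start=1):
            if word.search(line):
                hits.append({
                    "file": str(path.relative_to(root)),
                    "line": number,
                    "text": line.strip(),
                })
    return hits
```

Calling `find_usages("path/to/repo", "foo")` returns a list of small dictionaries, which is the sort of structured result an assistant can quote back as snippets.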

MCP vs. AI Agents: Understanding Their Roles, Strengths & How They Work Together

In the world of modern AI, two terms often pop up in technical conversations: MCP and AI Agents. While they might sound similar at first, they serve very different purposes—but together, they make AI systems a lot more powerful.

What’s the Difference Between MCP and an AI Agent?

Think of an AI Agent as the “brain” — it thinks, plans, and makes decisions. It uses models like GPT or Claude to solve tasks step-by-step, often using tools, memory, or past experiences to figure out the best solution.

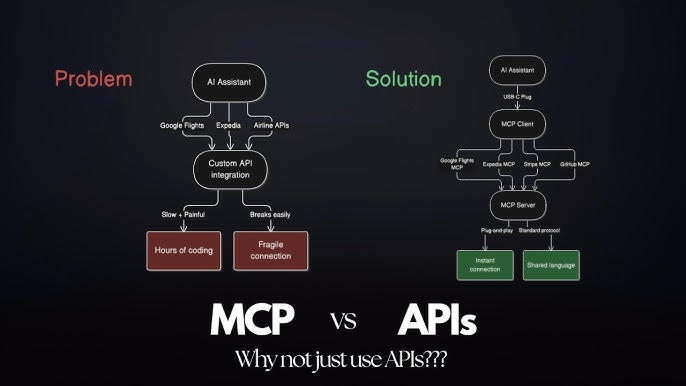

On the other hand, MCP (Model Context Protocol) is like a smart data plug. It’s not a model, and it doesn’t “think.” Instead, it helps AI agents connect to live data sources, tools, or APIs, a bit like giving the brain access to the internet or a company’s database.

Why MCP is Good

One standard to rule them all: Instead of building 10 custom integrations, you build one MCP server, and any MCP-compatible AI client can use it.

Switch models easily: Want to go from OpenAI to another LLM? No need to rewrite tool access. The MCP server stays the same, and only the client side changes.

Security-friendly: Data stays on your own servers. The AI only sees what the MCP server allows.

Faster dev cycles: Just plug in new servers and test them without changing your AI’s code.
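The “switch models easily” point can be sketched as follows. A tool is described once, in the `name` / `description` / `inputSchema` shape an MCP server advertises, and a thin client-side adapter reshapes it for whichever model API is in use. The tool `get_commodity_price` is hypothetical, and the two target shapes reflect how OpenAI-style and Anthropic-style tool definitions are commonly documented, so treat them as assumptions to check against each provider’s current reference.

```python
# One tool, described once (hypothetical tool name and schema).
MCP_TOOL = {
    "name": "get_commodity_price",
    "description": "Return the latest price for a commodity.",
    "inputSchema": {
        "type": "object",
        "properties": {"symbol": {"type": "string"}},
        "required": ["symbol"],
    },
}

def to_openai_tool(tool: dict) -> dict:
    """Reshape an MCP tool description for OpenAI-style function calling."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["inputSchema"],
        },
    }

def to_anthropic_tool(tool: dict) -> dict:
    """Reshape an MCP tool description for Anthropic-style tool use."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["inputSchema"],
    }
```

The server-side definition never changes here. Swapping models means swapping one small adapter, not rewriting the integration.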

Why AI Agents Are Great Too

Multi-agent teamwork: Some systems let agents collaborate (e.g. one agent researches, another acts).

Tool-flexible: Agents can use almost any method (APIs, functions, scripts) to get things done.

MCP + AI Agent = The Power Duo

MCP (Model Context Protocol) and AI agents aren’t competing technologies. They’re complementary components of a modern AI system. While AI agents are responsible for making decisions, planning tasks, and executing multi-step reasoning, MCP serves as the communication layer that connects those agents to live data and external tools. The agent decides what needs to be done, and MCP handles how to access the relevant resources to make that action possible. Instead of building custom integrations or writing new code for every data source or service, MCP offers a standardized, model-agnostic way for agents to interact with a wide range of systems.

This setup eliminates the need for hardcoded plugins or one-off scripts and replaces them with a flexible, reusable protocol. That means any AI—regardless of the underlying model—can retrieve real-time context from any MCP-connected source, without modifying its reasoning logic. It streamlines workflows, increases interoperability, and significantly reduces development time. By separating the roles of “thinking” and “fetching,” MCP and AI agents together create a scalable and efficient architecture that brings both intelligence and context-awareness to AI-driven applications.
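The split between “thinking” and “fetching” can be sketched in a few lines. In this toy version, `decide` is a rule-based stand-in for the model (a real agent would call an LLM there), and `call_tool` stands in for a request to an MCP-connected server. The tool name `lookup_order`, its canned reply, and the step format are all assumptions made for illustration.

```python
from typing import Callable

# "Fetching" side: a registry standing in for MCP-connected tools (hypothetical).
TOOLS: dict[str, Callable[..., str]] = {
    "lookup_order": lambda order_id: f"order {order_id}: shipped",
}

def call_tool(name: str, arguments: dict) -> str:
    """Run a tool by name; in a real system this would be a request to an MCP server."""
    return TOOLS[name](**arguments)

def decide(question: str, observations: list[str]) -> dict:
    """'Thinking' side: pick the next step from the question and what is known so far."""
    if not observations and "order" in question.lower():
        order_id = question.rstrip("?").split()[-1]
        return {"action": "call_tool", "name": "lookup_order",
                "arguments": {"order_id": order_id}}
    text = observations[-1] if observations else "I need more detail."
    return {"action": "answer", "text": text}

def run_agent(question: str, max_steps: int = 3) -> str:
    """Alternate between deciding and fetching until the agent has an answer."""
    observations: list[str] = []
    for _ in range(max_steps):
        step = decide(question, observations)
        if step["action"] == "answer":
            return step["text"]
        observations.append(call_tool(step["name"], step["arguments"]))
    return "Stopped: step limit reached."
```

Note that `decide` never touches the data source and `call_tool` never makes a decision, so either side can be replaced without changing the other.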

GTWY & MCP – The Backbone of Smart AI Workflow

As AI systems become more integrated into business operations, two important technologies are making it easier (and safer) to scale: GTWY (Gateway) and MCP (Model Context Protocol). While they serve different roles, together they unlock powerful, secure, and flexible AI experiences for both developers and end users.

GTWY: The Control Tower of Your AI Ecosystem

GTWY (as in GTWY.ai) is a no-code platform designed to help you build AI-powered workflows, automate business processes, and seamlessly integrate with your favorite tools, all without writing a single line of code. Think of GTWY as your AI system’s mission control. It empowers you to configure what your AI can access, how it interacts with different applications like Slack, Notion, CRMs, and more, and what logical steps it should follow throughout the workflow.

What makes GTWY especially powerful is its user-friendly, visual approach to AI development. With intuitive building blocks such as forms, buttons, decision trees, and database integrations, you can craft complex AI experiences much like assembling LEGO pieces. GTWY also takes care of orchestration, allowing you to connect APIs, databases, or third-party services with ease. From designing smart prompt flows to deploying scalable AI agents or assistants, GTWY offers a structured, secure, and highly flexible environment. It ensures governance and access control, so your AI systems operate safely and according to your organization’s needs.

MCP: The Translator Between AI and Your Data

On the other hand, MCP (Model Context Protocol) is not an app you interact with directly but a background communication layer that plays a critical role in how AI systems access data. Think of MCP as a universal translator that allows AI models (like those from OpenAI, Anthropic, or open-source projects) to request and retrieve real-time information from external tools, databases, or files without requiring direct access to them.

MCP provides a standardized way for AI to ask for context and receive structured, up-to-date responses. Whether it’s fetching the latest sales data, looking up customer records, or pulling in documentation, MCP makes sure the AI model receives clean and usable information. This protocol works across platforms and tools, meaning once you connect a system to an MCP server, any compatible AI client can access it. It’s highly model-agnostic, improves security by ensuring data stays within your infrastructure, and supports scalable integration by decoupling tool access from the AI logic itself.
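One way to read “data stays within your infrastructure” is that the server decides which fields ever leave it. The sketch below assumes a hypothetical customer record and an allowlist of fields. The names `CUSTOMERS`, `ALLOWED_FIELDS`, and `get_customer_summary` are invented for illustration, and a real deployment would add authentication and auditing on top.

```python
# Hypothetical internal record; in practice this would come from a CRM or database.
CUSTOMERS = {
    "c-17": {
        "name": "Dana Reyes",
        "plan": "pro",
        "email": "dana@example.com",
        "card_number": "4111111111111111",
    },
}

# Only these fields may be returned to the AI client.
ALLOWED_FIELDS = {"name", "plan"}

def get_customer_summary(customer_id: str) -> dict:
    """Return the allowlisted view of a customer record, never the raw row."""
    record = CUSTOMERS.get(customer_id)
    if record is None:
        return {"found": False}
    visible = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    return {"found": True, "customer": visible}
```

If a tool on the server wraps a function like this, the model receives the name and plan but never the email or card number, no matter how the question is phrased.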

GTWY + MCP: Better Together

While GTWY and MCP are very different in what they offer, they work in perfect harmony when it comes to building intelligent, responsive, and secure AI applications. GTWY serves as the design and orchestration layer—it defines what tasks the AI can perform, how it interacts with users, and how different services are coordinated within a workflow. MCP, meanwhile, ensures that the AI has real-time access to the information it needs to reason, plan, and act effectively.

Together, they eliminate the complexity of building AI applications from scratch. GTWY gives you the tools to visually build and deploy your workflows, while MCP ensures that your AI agents always have the most relevant and timely context at their fingertips. This combination leads to faster development, better decision-making, and seamless scalability across tools, teams, and models. In short, GTWY builds the brain’s environment—and MCP feeds it the knowledge to thrive.